5 Creative uses for Robots.txt

A robots.txt file is a text file that tells search engine robots which URLs they can access on your website. It is used mainly to avoid overloading your site with requests; it is not a mechanism for keeping a web page out of search engines. To do that effectively you would need to block indexing of the page(s) with a noindex tag or put them behind password protection.


For example, you can use a robots.txt file to keep crawlers away from duplicate content. A robots.txt file can also be used to keep internal search results pages from being crawled by search engine bots. It is not absolutely required, but it is certainly good to have.
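To make the internal search example concrete, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The rules, the `/search` and `/print/` paths, and the example.com URLs are all made up for illustration, and Python's parser is only an approximation of how any particular search engine reads the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep bots out of internal search results and
# printer-friendly duplicates. These paths are illustrative only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /print/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that is already in memory (no network fetch)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# True means the named crawler may fetch the URL under these rules.
search_allowed = parser.can_fetch("Googlebot", "https://example.com/search?q=shoes")
product_allowed = parser.can_fetch("Googlebot", "https://example.com/products/shoes")
print(search_allowed, product_allowed)
```

A disallowed URL can still end up indexed if other pages link to it, so this only tells you what a well-behaved crawler will skip.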

One of the very first technical SEO fires I ever had to extinguish was one started by a robots.txt file. The startup where I was working launched a brand-new redesigned website, and we were excited to see the results. However, instead of traffic going up, it inexplicably went down. Anyone who has ever had the misfortune of screwing up a robots file (spoiler alert: that’s what happened) will know that a robots noindex directive is not an instant kiss of search death; it’s more like a slow, drawn-out slide, especially on a large site.

Since I had never seen anything like this before, I was frantically checking everything else that might cause this slow dive. While I turned over every rock to find the issue, I chalked it up to bad engineering, an algorithm update, or a penalty. Finally, I found a clue. A URL where I thought a ranking had slipped was actually no longer indexed. Then I found another URL like that and another one.

Only then did I check the robots.txt and discover that it was set to noindex the entire site. When the brand-new site had been pushed live, everything had moved from the staging site to production, including the robots file, which had been set to noindex the staging site. The next time Googlebot revisited the homepage, it fetched the robots.txt and saw the noindex directive. Ever since that experience I have had a healthy fear of robots files, but that doesn’t mean they can’t be useful and fun.
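A check like the following, run before a launch, might have saved me weeks of panic. It is only a sketch: the function name `staging_leftovers` and the sample files are hypothetical, and it looks for just two symptoms, a homepage that is disallowed and any line that starts with noindex.

```python
from urllib.robotparser import RobotFileParser


def staging_leftovers(robots_txt: str, agent: str = "Googlebot") -> list:
    """Return warnings that suggest a staging robots.txt reached production."""
    warnings = []

    # Symptom 1: the crawler is not allowed to fetch the homepage.
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    if not parser.can_fetch(agent, "/"):
        warnings.append("homepage is disallowed for " + agent)

    # Symptom 2: a noindex rule in the file. Python's parser ignores
    # unknown fields, so this is a plain text scan.
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip().lower()
        if line.startswith("noindex"):
            warnings.append("noindex rule found: " + raw.strip())

    return warnings


# Hypothetical files for illustration.
STAGING = "User-agent: *\nDisallow: /\nNoindex: /\n"
PRODUCTION = "User-agent: *\nDisallow: /search\n"

print(staging_leftovers(STAGING))
print(staging_leftovers(PRODUCTION))
```

Wiring this into a deploy pipeline so that any warning fails the release is one option; how strict to be is a judgment call for your own site.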

Make robots files useful

Don't just think of a robots.txt as a file that you use to block search engines from crawling pages on your site. There are creative uses for this file if you allow yourself to think outside the box.

Note that if you do any of these, you should put a comment marker (#) in front of any non-code line so you don’t inadvertently break your robots file. These are just examples of what you can do with a robots file once you start being creative.
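If you want a safety net for that, a small lint can flag any line that is neither blank, nor a comment, nor a directive. This is a rough sketch: the `stray_lines` helper and the `KNOWN_FIELDS` list are my own assumptions, not an official list of what every crawler accepts.

```python
# Assumed set of directive names to accept; extend it for your own file.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def stray_lines(robots_txt: str) -> list:
    """Return (line_number, text) for lines that are not blank, not
    comments, and not a known 'field: value' directive."""
    problems = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        # Everything after '#' is a comment and is ignored.
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        field, separator, _value = line.partition(":")
        if not separator or field.strip().lower() not in KNOWN_FIELDS:
            problems.append((number, raw))
    return problems


# Hypothetical recruiting message, with and without the comment marker.
COMMENTED = "# We are hiring! See /careers\nUser-agent: *\nDisallow: /search\n"
UNCOMMENTED = "We are hiring! See /careers\nUser-agent: *\nDisallow: /search\n"

print(stray_lines(COMMENTED))
print(stray_lines(UNCOMMENTED))
```

The first file comes back clean, while the second reports line 1 as a stray line that needs a `#` in front of it.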

Recruiting

Use robots files to advertise open roles. The only humans who are ever going to look at a robots file are either search engine engineers or search marketers. This is a great place to grab their attention and encourage them to apply. For an example of this, check out Tripadvisor:

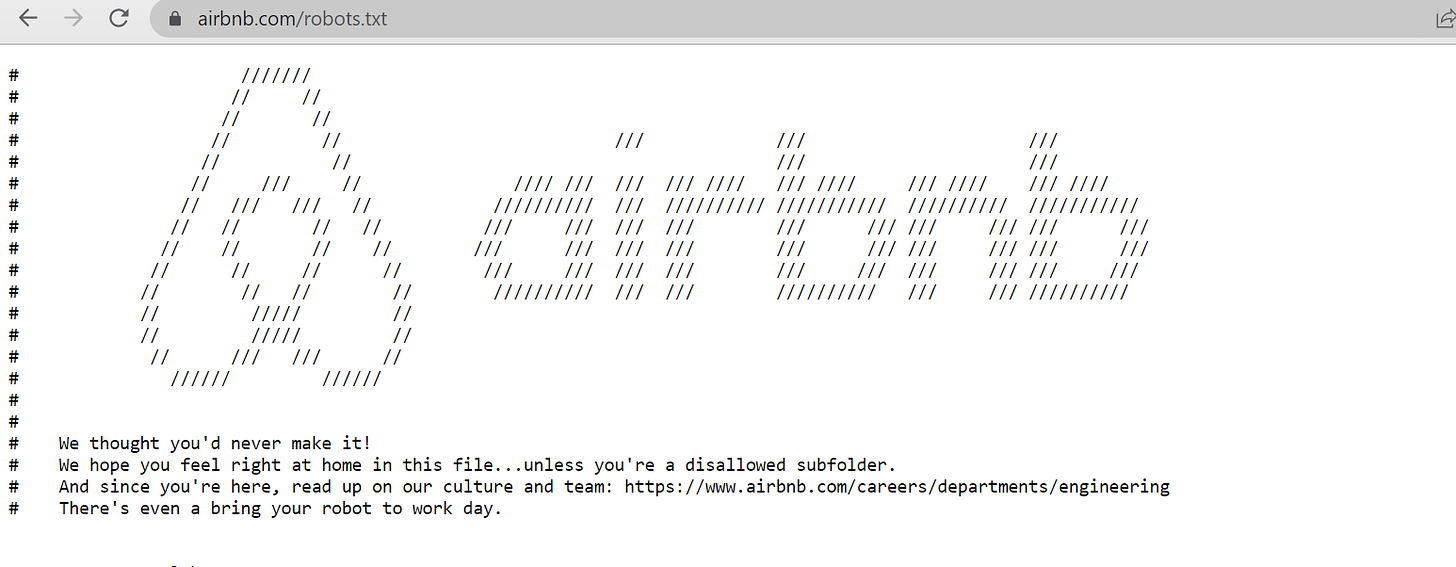

Branding

Showcase something fun about your brand, like Airbnb does. They write out their brand name in ASCII art, which reflects their design sense, and make a casual reference to careers at the company.

Mystery

Anything listed in a robots file as disallowed that appears to be a traditional file (and not a reference to scripts) will inevitably be something that people want to check out if they find it. If you produce a technical tool, this might be the place to offer a discount only to the people who find the link in the robots file, with a URL like “secret-discount”. If someone goes to the trouble of exploring your robots file, they are most definitely going to navigate to a page that references a secret discount!

Subterfuge

Any good marketer should always check out their competitors’ robots.txt files to see if there is anything the competition is doing that you should keep track of. Assume that your competitors are going to check out your robots file and do the same to you. This is where you can be sneaky by dropping in links and folders that don’t exist.

Would you love to do a rebrand but can’t afford it? Your competitors don’t need to know that! Put a disallow on a folder called /rebrand-assets.

For added fun you can even put a date on it, so the competition might mark their calendars for an event that never happens. When you start being creative along these lines, the ideas are truly endless. You can make references to events you won’t be having, job descriptions you aren’t releasing or even products with really cool names that will never exist.

Just make sure you block anyone from seeing a real page with either password protection or a 404, so this does remain just a reference to an idea.
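One way to double-check that is to request each decoy path and confirm the response is a 404 or a password prompt. The sketch below assumes password protection shows up as HTTP 401. The names `http_status` and `exposed_decoys`, the example.com domain, and the decoy paths are all hypothetical.

```python
import urllib.error
import urllib.request

# Assumption: a 401 means password protected and a 404 means not found.
HIDDEN_STATUSES = {401, 404}


def http_status(url: str) -> int:
    """Return the HTTP status code for a GET request to url."""
    try:
        with urllib.request.urlopen(url, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urllib raises on 4xx/5xx responses; the code is what we want.
        return error.code


def exposed_decoys(base_url: str, decoy_paths, status_of=http_status) -> list:
    """Return the decoy paths that respond with something other than a
    hidden status, meaning a visitor could see a real page there."""
    base = base_url.rstrip("/")
    return [path for path in decoy_paths
            if status_of(base + path) not in HIDDEN_STATUSES]


# Offline demonstration with canned status codes instead of real requests.
fake_statuses = {
    "https://example.com/rebrand-assets": 404,
    "https://example.com/secret-launch": 200,
}
leaks = exposed_decoys(
    "https://example.com",
    ["/rebrand-assets", "/secret-launch"],
    status_of=fake_statuses.get,
)
print(leaks)
```

Calling `exposed_decoys` without the `status_of` argument makes real requests against your own site.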

Taxonomy

A robots file really exists to disallow folders and files, not to allow them, since anything that is not disallowed can be crawled by default. An exercise where you sit down with content or engineering to list the folders that should be allowed might be a good way to find out if there are folders that should not exist. Truthfully, the value in this is just the exercise, but you can carry it forward and actually lay out all the allowed folders in the robots file as a way of detailing the taxonomy.
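If you do carry it forward, the allowed-folder list is easy to generate from whatever inventory comes out of that meeting. This is a small sketch; the `taxonomy_block` function and the folder names are invented for illustration.

```python
def taxonomy_block(folders, agent: str = "*") -> str:
    """Build a robots.txt group with one Allow line per top-level folder."""
    # Normalize to '/name/' form, drop duplicates, and sort for a stable file.
    paths = sorted({"/" + folder.strip("/") + "/" for folder in folders})
    lines = ["User-agent: " + agent]
    lines.extend("Allow: " + path for path in paths)
    return "\n".join(lines) + "\n"


# Hypothetical folder inventory from a content or engineering review.
block = taxonomy_block(["blog", "/products/", "blog", "help/"])
print(block)
```

For this input the output lists `/blog/`, `/help/`, and `/products/` once each under `User-agent: *`. Any folder that nobody in the room can explain is a candidate for cleanup.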

Have fun

There is a lot you can do with this boring file if you think of it as another asset or channel on your website.